The massive use of cheaper synthetic data sets to train artificial intelligence generates an unexpected phenomenon that spoils the results

Artificial intelligence's worst enemy might be artificial intelligence itself. A study published in July 2023 by researchers at Rice and Stanford universities in the US States, has revealed that training AI models using synthetic data seems to cause their disintegration, at least in the long term. The term coined by the scholars to refer to this apparent “autoimmune” disorder in the software is MAD, an acronym for Model Autophagy Disorder. The paper raises serious questions about what might be the weakness of an already very widespread practice in machine learning.

Synthetic data comprises computer data sets that differ from traditional data, because it is generated artificially and not collected in a conventional manner. It is created to emulate the real world using artificial intelligence, and designed to train machine learning models. “Synthetic data is used in the development, testing and validation of machine learning services, where actual data is not available in the necessary amounts or does not exist”, explains the Spanish Data Protection Agency (AEPD). This data set may include images of non-existent human faces, texts written using algorithms, simulated financial data, artificial voice recordings and completely invented weather data. With the proliferation of businesses related to the development of AI, demand for synthetic data is growing. The consultancy firm Gartner estimates that by 2030, it will largely replace "real" data in the training of this type of software.

More availability, cheaper and free of copyright

In a world that requires more and more digital information to develop programs based on machine learning, the benefits of this solution are clear. Synthetic data do not have to be collected and are an optimal resource for creating very large databases. Furthermore, being completely free of copyright or privacy constraints, they avoid the legal issues faced by ChatGPT and other artificial intelligence models trained using massive quantities of information available online, a practice known as scraping.

In the article published by researchers, five training cycles with synthetic data were enough for the results of the examined AI model "to explode". According to the document, if you repeatedly train with this type of data, the machine begins to extract increasingly convergent and less varied content. The repetition of this process, the researchers explain, creates the "autophagy" cycle called MAD.

Data inbreeding

The problem arises from what we might call a kind of data inbreeding, which leads the software to produce increasingly unsatisfactory results. In fact, according to Jonathan Sadowski, researcher at Monash University in Melbourne (Australia), we are talking about “a system that is so trained in the results of other generative AI that it becomes an inbred mutant (...) with exaggerated and grotesque characteristics”. Hence the analogy with mad cow disease.

In addition, the data sets currently used to train general models mainly come from the internet. Therefore, many AI are already trained, without knowing it, with increasing amounts of synthetic data. This is the case with the popular Laion-5B data set, used to train state-of-the-art text-image models, such as Stable Diffusion, which contains a mixture of synthetic images already sampled by other models. The risk is to cause a phenomenon with exponential consequences.

The future, our best investment

Capitalize on opportunities in a way that is positive, conscientious and committed, with solutions designed for you by our investment experts in your bank in Switzerland.

The next AI

However, it is indisputable that synthetic data is a new frontier of artificial intelligence. The relationship between quality and convenience is incomparable, and not just for economic reasons. At the level of general trends, it is foreseeable that in 2024, AI will have a significant impact on human resources management, with automated candidate screening. It will also be increasingly central to cybersecurity, with algorithms that can quickly detect and neutralize sophisticated threats.

On the other hand, the generalized adoption of AI in decision-making processes will force users to ensure transparency, with the statement of the so-called explainable AI (XAI) that will allow users to understand and trust the results generated by algorithms. AI will also improve personalization of the user experience, for example through chatbots. Finally, it is estimated that the global market for artificial intelligence in the field of health will reach $188 billion by 2030. The hope is that the technology can reduce mistakes and improve the quality of care, with a positive impact on people's lives.

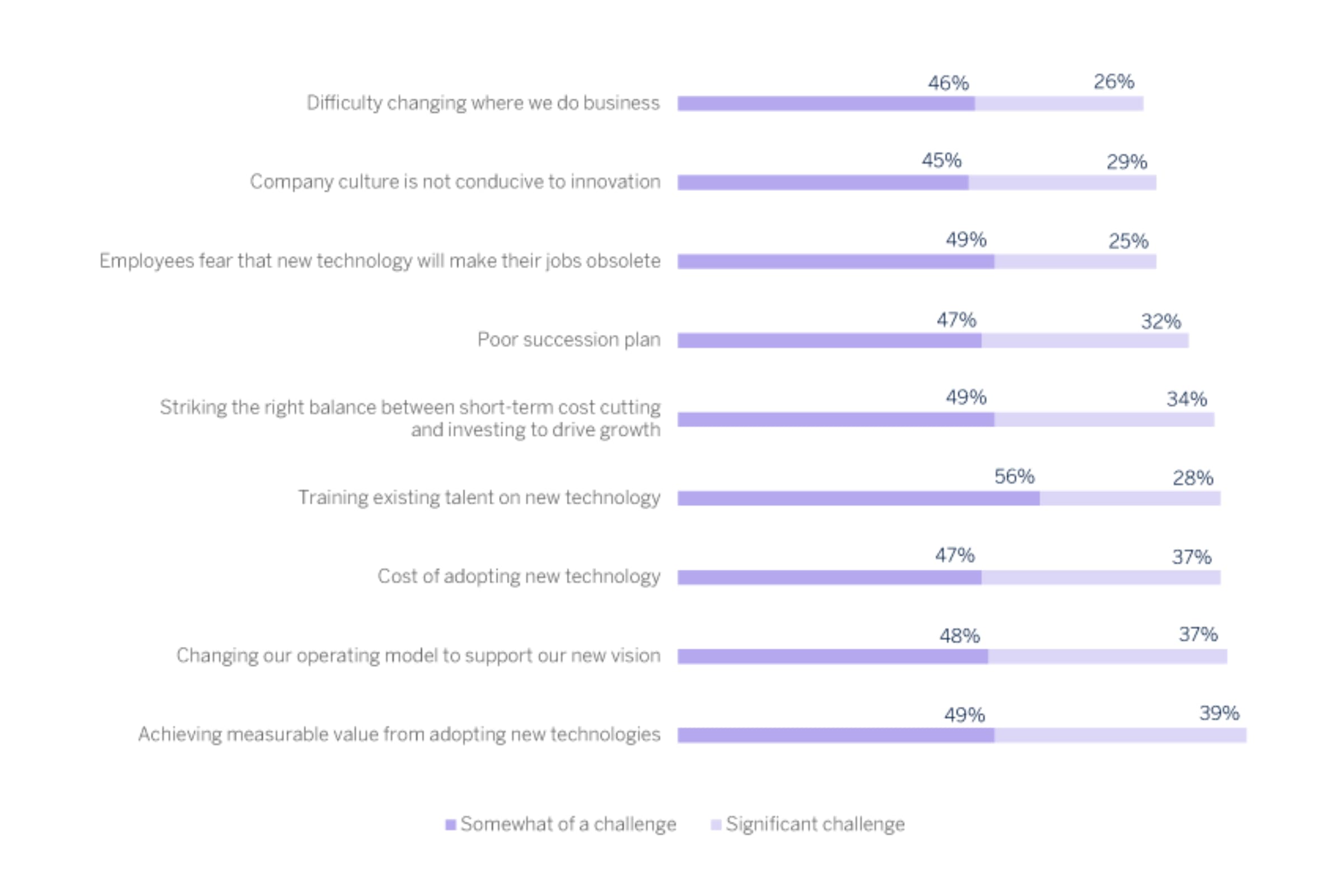

Challenges in the internal transformation of companies in the United States in 2023